As a long-time tech blogger, I constantly encounter emerging AI technologies that spark my curiosity. Recently, I stumbled upon Gemma, an LLM project from Google DeepMind that sent my excitement levels soaring.

Gemma isn’t just another large language model (LLM) like the ones behind ChatGPT or Bard. It is a game-changer, offering openly available weights for a powerful language processing tool.

But what exactly is Gemma, and how does it stack up against other LLMs?

In this article, we will explore Gemma and its capabilities.

What is an LLM in generative AI?

Before diving into Gemma, let’s take a quick look at LLMs.

LLM stands for Large Language Model. It’s a type of artificial intelligence (AI) system trained on massive text datasets using machine learning, deep learning, and natural language processing (NLP) techniques.

This training allows LLMs to understand and generate human-like language, making them capable of tasks such as:

- Text generation

- Question answering

- Translation

- Summarization of long text

Our AI-based text summarizer tool is made with a refined LLM.

What is Google’s Gemma LLM?

Gemma is a family of lightweight, state-of-the-art open models developed by Google DeepMind and other Google teams. The models are built from the same research and technology behind the “Gemini” family of LLMs, are designed to be accessible and easy to use, and can be fine-tuned for a variety of business use cases.

The name comes from the Latin word “gemma” (i.e., precious stone), and the models aim to empower developers with tools that facilitate innovation, collaboration, and responsible usage.

It’s a set of lightweight, generative AI models that are small enough to run on a laptop.

Gemma is currently available worldwide. 🌍

Gemma Open Models

Gemma uses a decoder-only transformer architecture, which is geared toward text generation. The model reads the input text and produces its output one token at a time, with each new token conditioned on everything that came before it.
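To make "decoder-only" more concrete, here is a toy sketch of the autoregressive loop such models run: predict one token, append it, and repeat. The score table and the `predict_next` and `generate` helpers are hypothetical stand-ins for a real transformer, not Gemma code.

```python
# Toy autoregressive generation loop (illustrative only, not Gemma code).
# A real decoder-only transformer scores the next token from ALL previous
# tokens; this stand-in "model" looks only at the last token to stay tiny.

# Hypothetical next-token scores, keyed by the previous token.
NEXT_TOKEN_SCORES = {
    "<bos>": {"gemma": 0.9, "is": 0.1},
    "gemma": {"is": 0.8, "open": 0.2},
    "is": {"open": 0.7, "<eos>": 0.3},
    "open": {"<eos>": 1.0},
}


def predict_next(tokens):
    """Return the highest-scoring next token given the tokens so far."""
    scores = NEXT_TOKEN_SCORES[tokens[-1]]
    return max(scores, key=scores.get)


def generate(prompt_tokens, max_new_tokens=10):
    """Greedy decoding: append one predicted token at a time."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = predict_next(tokens)
        if next_token == "<eos>":  # stop when the model ends the sequence
            break
        tokens.append(next_token)
    return tokens


print(generate(["<bos>"]))
```

Real models usually sample from the predicted distribution rather than always taking the top token, but the loop structure is the same.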

Google has released model weights in two sizes:

- Gemma 2B: With 2 billion parameters, this is the lightweight option, suited to resource-constrained environments such as laptops or mobile devices.

- Gemma 7B: This larger model contains 7 billion parameters, offering stronger performance in exchange for more memory and compute.
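A back-of-the-envelope calculation shows why size matters for deployment. The sketch below multiplies the nominal parameter counts quoted above by the bytes each parameter takes at a given precision. The `weight_memory_gb` helper and the dtype table are illustrative assumptions, and the result covers only the weights, not activations or other runtime overhead.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Uses the nominal 2B / 7B counts from the text; real checkpoints and
# runtime overhead (activations, caches) will add to these numbers.

BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}
NOMINAL_PARAMS = {"gemma-2b": 2_000_000_000, "gemma-7b": 7_000_000_000}


def weight_memory_gb(model_name, dtype):
    """Approximate memory needed just to hold the weights, in GB (10**9 bytes)."""
    return NOMINAL_PARAMS[model_name] * BYTES_PER_PARAM[dtype] / 1e9


for name in NOMINAL_PARAMS:
    for dtype in BYTES_PER_PARAM:
        print(f"{name} in {dtype}: ~{weight_memory_gb(name, dtype):.0f} GB")
```

At 16-bit precision the 2B model needs roughly 4 GB for its weights and the 7B model roughly 14 GB, which is why the smaller one is the more realistic choice for a laptop.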

Gemma models come in two variants:

- Pre-trained models: These models are trained on a massive dataset of text and code, allowing them to perform various tasks like text summarization, translation, and question answering.

- Instruction-tuned models: These models undergo an additional fine-tuning process where they are trained on specific instructions or datasets, tailoring them to perform specialized tasks such as writing different creative text formats or specific coding functionalities.
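Instruction-tuned checkpoints generally expect prompts wrapped in a conversation format. The sketch below assumes the `<start_of_turn>` and `<end_of_turn>` markers described in Google's Gemma documentation. `format_chat_prompt` is a hypothetical helper written for this article; in practice, the chat template bundled with a tokenizer is the safer choice.

```python
def format_chat_prompt(user_message):
    """Wrap a single user message in Gemma-style turn markers (assumed format)."""
    return (
        "<start_of_turn>user\n"
        f"{user_message}<end_of_turn>\n"
        "<start_of_turn>model\n"
    )


print(format_chat_prompt("Summarize this article in one sentence."))
```

The prompt ends with an open model turn, so the model's continuation becomes its reply.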

Key Technical Aspects:

- Low-Rank Adaptation (LoRA): This technique fine-tunes Gemma models by training a small number of additional parameters while the original weights stay frozen, making the models cheaper and easier to adapt for various tasks.

- Multi-framework support: Gemma models can be used across popular deep learning frameworks like TensorFlow, PyTorch, JAX, and Hugging Face Transformers, offering flexibility for developers.

- Cross-device compatibility: These models can run on various devices, including CPUs, GPUs, and TPUs, enabling deployment on different hardware configurations.
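The LoRA idea from the list above can be sketched in a few lines of plain Python. Instead of updating a full weight matrix W, LoRA learns two thin matrices B and A and adds their product to W. The layer dimensions, the `scale` argument, and the helper names are assumptions chosen for illustration, not values taken from Gemma.

```python
# LoRA in miniature: freeze W (d x k) and learn a low-rank update B @ A,
# where B is d x r and A is r x k, with r much smaller than d and k.

def lora_trainable_params(d, k, r):
    """Trainable parameters for one LoRA-adapted weight matrix."""
    return r * (d + k)


def matmul(x, y):
    """Plain-Python matrix product, to keep the sketch dependency-free."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_effective_weight(W, B, A, scale=1.0):
    """Return W + scale * (B @ A); W itself is never modified."""
    delta = matmul(B, A)
    return [[w + scale * dw for w, dw in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


# Hypothetical layer size, chosen only to show the savings.
d, k, r = 2048, 2048, 8
print(f"full fine-tune: {d * k:,} params, LoRA (r={r}): {lora_trainable_params(d, k, r):,} params")

# Tiny 2x2 example with a rank-1 update.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]   # 2 x 1
A = [[0.5, 0.5]]     # 1 x 2
print(lora_effective_weight(W, B, A))
```

For the hypothetical 2048 x 2048 layer, a rank-8 adapter trains 32,768 parameters instead of about 4.2 million, which is where the efficiency comes from.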

How to use the Gemma AI Model?

Gemma is ready to use in Colab and Kaggle notebooks and integrates with popular AI tools such as Hugging Face, NVIDIA NeMo, MaxText, and TensorRT-LLM, which makes it easy to get started.

1. Accessing the Model:

- Model Selection: Choose the appropriate model size (2B or 7B) and variant (pre-trained or instruction-tuned) based on your task and available resources.
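One way to keep this choice explicit in code is a small helper that maps a size and variant to a model identifier. The sketch assumes the `google/gemma-<size>` naming used on Hugging Face, with an `-it` suffix for instruction-tuned checkpoints; `gemma_model_id` is a hypothetical function, not part of any library.

```python
# Map a size and variant choice to a Hugging Face model id.
# Assumes the "google/gemma-<size>[-it]" naming pattern.

VALID_SIZES = ("2b", "7b")
VALID_VARIANTS = ("pre-trained", "instruction-tuned")


def gemma_model_id(size, variant):
    """Return an id such as 'google/gemma-2b' or 'google/gemma-7b-it'."""
    if size not in VALID_SIZES:
        raise ValueError(f"size must be one of {VALID_SIZES}")
    if variant not in VALID_VARIANTS:
        raise ValueError(f"variant must be one of {VALID_VARIANTS}")
    suffix = "-it" if variant == "instruction-tuned" else ""
    return f"google/gemma-{size}{suffix}"


print(gemma_model_id("2b", "instruction-tuned"))
```

Validating the inputs up front avoids a confusing download error later if a size or variant is mistyped.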

2. Setting Up the Environment:

- Hardware and Software: Ensure you have a compatible computer with Python 3.10 or higher and libraries like JAX installed.

- Downloading the Model: Once you’ve selected the model, follow the download instructions provided by Google.
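Before downloading anything, it can help to confirm the basics programmatically. The following is a minimal sketch, using only the standard library, that checks the Python version and whether JAX is importable. `check_environment` is a hypothetical helper, and the package list should be adjusted to whichever framework you plan to use.

```python
import importlib.util
import sys


def check_environment(min_python=(3, 10), required_packages=("jax",)):
    """Report whether Python is new enough and which packages are importable."""
    report = {"python_ok": sys.version_info >= min_python}
    for package in required_packages:
        # find_spec returns None when the package is not installed.
        report[package] = importlib.util.find_spec(package) is not None
    return report


print(check_environment())
```

A `False` entry in the report points to what still needs installing before you move on to the model itself.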

3. Using the Model:

- Code Libraries: Depending on your chosen framework (e.g., JAX, TensorFlow, PyTorch), utilize the provided code libraries and examples to interact with the model.

- Input and Output: Prepare your input text and configure the model parameters like the desired output length.

- Running the Model: Execute the code to run the model and generate the desired output.
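The three steps above can be sketched with the Hugging Face Transformers library, which is one of several possible routes. This example assumes that `transformers` and PyTorch are installed and that you have accepted the Gemma terms for the chosen model on Hugging Face. `generate_text` is a hypothetical wrapper written for this article.

```python
def generate_text(prompt, model_id="google/gemma-2b", max_new_tokens=64):
    """Generate a continuation of `prompt` with a Gemma checkpoint.

    Assumes `transformers` and `torch` are installed and that access to the
    chosen model has been granted on Hugging Face.
    """
    # Imported lazily so this file can be loaded without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Tokenize the input text into PyTorch tensors.
    inputs = tokenizer(prompt, return_tensors="pt")
    # max_new_tokens caps the length of the generated output.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_text("Write a haiku about open models.")` downloads the weights on first use, so expect the first run to take a while.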

🌟 Remember, using Gemma LLM effectively requires technical expertise in deep learning and experience with code.

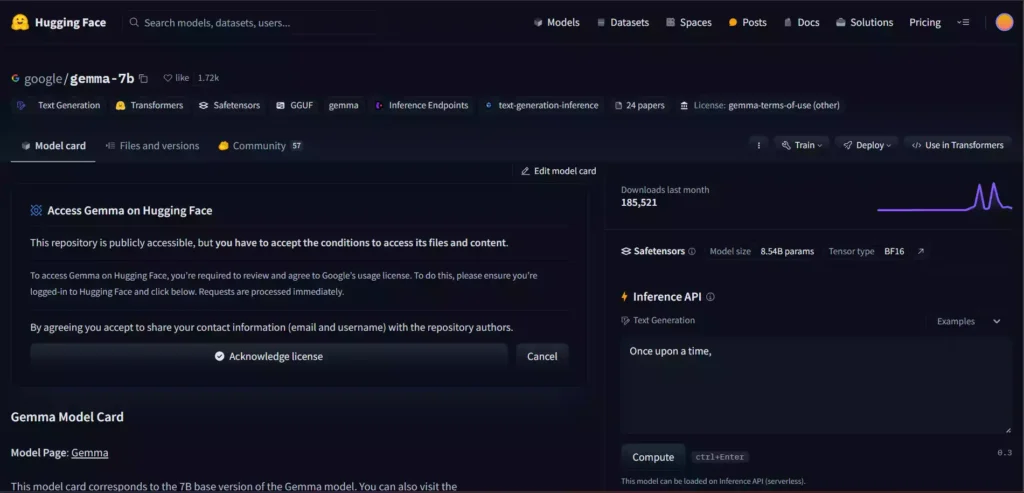

Gemma Models in the Hugging Face Community

Google emphasizes responsible use of its models and provides frameworks to support ethical development. Wiring these frameworks into a Hugging Face workflow may take some setup, but the models themselves are easy to use on the platform.

You can run the Gemma model on the Hugging Face platform.

| Model Name | Size and variant |
|---|---|
| google/gemma-7b | 7B, pre-trained |
| google/gemma-7b-it | 7B, instruction-tuned |
| google/gemma-2b | 2B, pre-trained |
| google/gemma-2b-it | 2B, instruction-tuned |

Gemma on Hugging Face remains a valuable resource for AI exploration. Remember to access and use it responsibly, according to Google’s guidelines.

What are the potential applications of Gemma LLM?

Gemma can be applied to a wide range of real-world tasks:

- Text generation (creative writing, code generation)

- Language translation

- Question answering

- Chatbots

- Data analysis and exploration

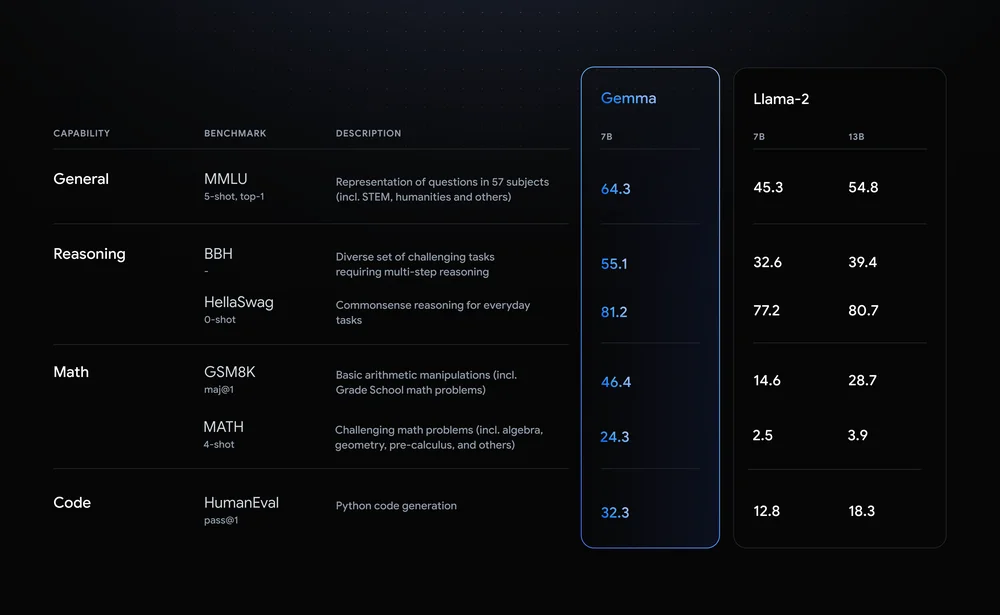

Gemma LLM Performance Insights

Gemma LLM, with its accessible and efficient design, presents exciting possibilities for various NLP tasks. While still relatively new, its performance holds promise across several areas:

Benchmark Performance:

- Competitive: Compared to other lightweight LLMs, Gemma demonstrates competitive performance on standard NLP benchmarks like SQuAD (question answering) and LAMBADA (language understanding).

- Task-specific variations: Performance can vary depending on the specific task and chosen model variant (pre-trained vs. instruction-tuned). Instruction-tuned models often exhibit better performance on tasks they are specifically trained for.

Conclusion

Gemma is a significant advancement in AI models from Google DeepMind. It offers open, accessible, lightweight, and powerful language models.

In this article, we have explored what Gemma is, how to use it, and where it can be applied.

I believe Gemma has the potential to democratize AI, boost innovation, and shape the future of various industries and research areas.